Stop the Pilot Panic: Your 4-Step Guide to Proving Tangible ROI on Enterprise Gen AI

The AI Boom Is Real — But So Is the Hangover

Artificial Intelligence was pitched as capitalism’s next golden goose — capable of writing our emails, reinventing customer service, and transforming industries.

But as we near 2026, the goose looks more like a very expensive pigeon.

A recent MIT study (discussed in this video: https://www.youtube.com/watch?v=Zsh6VgcYCdI) reported that roughly 95% of enterprise AI pilots fail to deliver measurable ROI.

Budgets get burned on “AI experiments” that look exciting but rarely move the business needle.

As one executive put it, “Our dashboards are busy, but the bottom line stays flat.”

At SharkAI Solutions, we help organizations break this cycle through a structured, ROI-first execution framework — with Human-in-the-Loop (HITL) embedded in every stage to ensure transparency, accuracy, and real-world adoption.

Why Pilots Fail (Patterns We See Repeatedly)

- No ROI Definition: Pilots often chase model accuracy, not business value.

- Data Immaturity: Siloed or messy data leads to inconsistent insights.

- Build-Over-Buy Bias: Many teams build custom solutions that go obsolete before deployment, even though, as the video notes, companies that buy specialized AI tools succeed twice as often as those that build their own.

- Governance Gaps: Missing privacy and audit guardrails undermine trust.

- No Feedback Loop: Without human oversight or iteration, pilots drift off course.

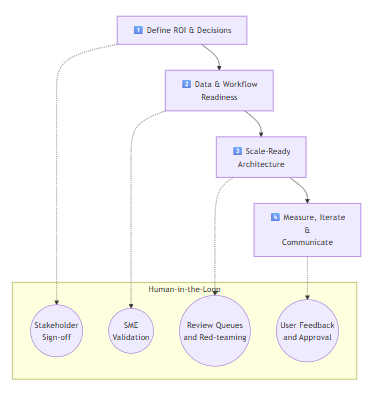

The 4-Step Framework to Proving Tangible ROI

(with Human-in-the-Loop at Every Stage)

Step 1 — Define ROI & Decision Pathways (Before Models)

Goal: Connect the pilot to measurable business outcomes, not model metrics.

What We Do

- Identify one clear business job-to-be-done — e.g., “Reduce case resolution time by 25%,” “Cut warranty claims by 15%,” or “Boost agent efficiency by 10%.”

- Map the current → desired → AI-assisted state with clear KPIs.

- Create acceptance criteria based on time, cost, or risk impact.
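
One way to make acceptance criteria concrete is to write each KPI down as a baseline plus a target change, then check measured values against it. The sketch below is a minimal illustration, not a prescribed tool: the `Kpi` class, its field names, and the 40-hour baseline are hypothetical and chosen only to mirror the "reduce case resolution time by 25%" example above.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    """One business KPI with a baseline and a target relative change."""
    name: str
    baseline: float        # value measured before the pilot
    target_change: float   # e.g. -0.25 means "reduce by 25%"

    def target_value(self) -> float:
        return self.baseline * (1 + self.target_change)

    def is_met(self, measured: float) -> bool:
        # A negative target means lower is better; a positive one means higher is better.
        if self.target_change < 0:
            return measured <= self.target_value()
        return measured >= self.target_value()


# Hypothetical baseline: 40 hours average case resolution time.
resolution_time = Kpi("case_resolution_hours", baseline=40.0, target_change=-0.25)

print(resolution_time.target_value())  # 30.0
print(resolution_time.is_met(29.0))    # True
print(resolution_time.is_met(33.0))    # False
```

Writing the target as a relative change keeps the criterion readable for business stakeholders while still giving engineers a pass/fail check.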

-

HITL in Step 1

- Bring real users and managers into ROI workshops to validate success criteria.

- Conduct sign-off checkpoints before development begins.

Output: A problem statement tied to ROI, acceptance criteria, and measurable business KPIs.

(Also see our related post: Build vs. Buy AI: Choosing Between Custom and Off-the-Shelf Solutions)

Step 2 — Data & Workflow Readiness (Trust Before Demos)

Goal: Build a trustworthy foundation of clean, compliant data before tuning models.

What We Do

- Consolidate structured and unstructured data sources for retrieval-augmented generation (RAG) pipelines.

- Implement data governance, PII scrubbing, and retention controls.

- Define guardrails and observability metrics for data flow, latency, and drift.
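
To give a feel for what PII scrubbing looks like at its simplest, here is a rough sketch that masks email addresses and phone-like numbers before text enters a retrieval index. The two regular expressions and the `scrub_pii` helper are illustrative assumptions; production pipelines typically need far broader coverage (names, addresses, national IDs) and usually rely on dedicated tooling.

```python
import re

# Illustrative patterns only; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def scrub_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


sample = "Contact jane.doe@example.com or call +1 (555) 010-2030 about ticket 4821."
print(scrub_pii(sample))
# Contact [EMAIL] or call [PHONE] about ticket 4821.
```

Short identifiers such as the ticket number are left alone because the phone pattern requires at least nine characters.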

HITL in Step 2

- Data stewards verify sample accuracy and label integrity.

- Subject-matter experts (SMEs) assess retrieval quality before it powers downstream AI.

Output: A secure, auditable, and reusable enterprise data layer ready for AI integration.

(For deeper insights, read Unlocking Data Insights with AI: Why Indian Industries Must Act Now)

Step 3 — Architect for Scale, Governance & Flexibility

Goal: Build pilots as scalable prototypes, not throwaway experiments.

What We Do

- Design modular architectures integrating:

  - RAG (Retrieval-Augmented Generation) for factual accuracy.

  - LLMOps for monitoring, evaluation, and compliance.

  - Graph layers & APIs for structured reasoning and interoperability.

  - Vendor-agnostic model gateways to avoid lock-in.

- Ensure multi-cloud portability and policy-based access controls.
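
A vendor-agnostic model gateway can be pictured as a thin routing layer: application code asks for a task, and the gateway decides which provider serves it. The sketch below is a simplified illustration under that assumption. `ModelGateway`, `vendor_a`, and `vendor_b` are hypothetical names, and the lambda stubs stand in for real vendor clients.

```python
from typing import Callable, Dict

# A "provider" here is any callable that takes a prompt and returns text.
Provider = Callable[[str], str]


class ModelGateway:
    """Routes requests to named providers so callers never import a vendor SDK."""

    def __init__(self) -> None:
        self._providers: Dict[str, Provider] = {}
        self._routes: Dict[str, str] = {}

    def register(self, name: str, provider: Provider) -> None:
        self._providers[name] = provider

    def route(self, task: str, provider_name: str) -> None:
        if provider_name not in self._providers:
            raise KeyError(f"unknown provider: {provider_name}")
        self._routes[task] = provider_name

    def complete(self, task: str, prompt: str) -> str:
        return self._providers[self._routes[task]](prompt)


# Stub providers stand in for real vendor clients (hypothetical names).
gateway = ModelGateway()
gateway.register("vendor_a", lambda p: f"[vendor_a] {p}")
gateway.register("vendor_b", lambda p: f"[vendor_b] {p}")

gateway.route("summarize", "vendor_a")
print(gateway.complete("summarize", "maintenance log 17"))  # [vendor_a] maintenance log 17

# Switching vendors is a routing change, not a code change in callers.
gateway.route("summarize", "vendor_b")
print(gateway.complete("summarize", "maintenance log 17"))  # [vendor_b] maintenance log 17
```

Because callers only know the task name, swapping or adding a model provider does not ripple through application code, which is the lock-in protection the architecture is after.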

HITL in Step 3

- Establish review queues for sensitive outputs.

- Run red-team sessions to test model safety and hallucination resistance.

Output: A production-ready pilot designed for easy scaling, not rework.

(Learn more about architecture in Future-Proof AI Systems: Building Scalable, Compliant AI Foundations)

(Also see: LLMOps: The Backbone of Enterprise-Ready AI)

Step 4 — Measure, Iterate & Communicate Impact

Goal: Prove ROI through transparent metrics and stakeholder alignment.

What We Do

- Build executive dashboards linking AI metrics (latency, inference cost) to business KPIs (SLA attainment, average handle time, defect rate).

- Run A/B rollouts (pilot → partial → full) with rollback safeguards.

- Establish continuous evaluation pipelines for drift and bias.
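
The staged rollout with rollback safeguards can be expressed as a simple gate: compare a KPI between a control group and the AI-assisted group, then advance, hold, or roll back. The sketch below is a deliberately simplified illustration. The `next_stage` function, the 5% minimum improvement, and the sample handle times are all hypothetical, and a real rollout would add a statistical significance check rather than comparing raw means.

```python
from statistics import mean

STAGES = ["pilot", "partial", "full"]


def next_stage(current: str, control: list[float], treatment: list[float],
               min_improvement: float = 0.05) -> str:
    """Advance one stage if the treatment group improves the KPI enough.

    Assumes a lower-is-better KPI such as average handle time.
    Returns "rollback" when the treatment group is worse than control.
    """
    base, new = mean(control), mean(treatment)
    improvement = (base - new) / base
    if improvement < 0:
        return "rollback"
    if improvement < min_improvement:
        return current  # hold at this stage and keep collecting data
    idx = STAGES.index(current)
    return STAGES[min(idx + 1, len(STAGES) - 1)]


# Hypothetical average handle times in minutes.
control_aht = [12.0, 11.5, 12.5, 12.0]
treated_aht = [10.0, 10.5, 9.5, 10.0]
print(next_stage("pilot", control_aht, treated_aht))  # partial
```

Making "rollback" an explicit outcome keeps the safeguard visible in the same place the promotion decision is made.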

HITL in Step 4

- Human validation for low-confidence or high-risk results.

- In-app feedback (“good answer/bad answer”) to improve retraining datasets.

- Weekly ops reviews for governance and transparency.
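
Routing low-confidence or high-risk results to a person can be as simple as a threshold check in front of the output. The sketch below assumes each result already carries a confidence score and a risk flag from upstream components; the `ModelOutput` fields, the 0.8 threshold, and the sample outputs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed to be a score in [0, 1] supplied upstream
    high_risk: bool    # assumed flag from a policy or risk classifier


def needs_human_review(output: ModelOutput, threshold: float = 0.8) -> bool:
    """Send low-confidence or high-risk results to a reviewer."""
    return output.high_risk or output.confidence < threshold


outputs = [
    ModelOutput("Replace filter on pump 3.", confidence=0.95, high_risk=False),
    ModelOutput("Bypass the pressure interlock.", confidence=0.97, high_risk=True),
    ModelOutput("Possibly a bearing fault.", confidence=0.55, high_risk=False),
]

review_queue = [o for o in outputs if needs_human_review(o)]
auto_approved = [o for o in outputs if not needs_human_review(o)]
print(len(review_queue), len(auto_approved))  # 2 1
```

Note that a confident answer still goes to review when it is flagged high-risk, so confidence alone never bypasses human oversight.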

Output: A measurable ROI story backed by real data and user trust.

Case Snapshot: From “Endless Pilot” to Enterprise Value

A manufacturing client launched a Gen AI pilot to summarize maintenance logs — it worked in demos but failed in production:

- Inconsistent data.

- No integration with ERP.

- Compliance risks.

- No measurable ROI.

Our Approach:

- Redefined goal → Reduce Mean Time to Repair (MTTR) by 25%.

- Built a RAG layer over verified records, with HITL validation.

- Deployed a scale-ready stack with model routing and governance.

- Measured ROI through MTTR dashboards and compliance audits.
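
For readers who want to see how an MTTR dashboard figure is derived, here is a small sketch of the arithmetic: MTTR is the average time between an incident being reported and repaired, and the reduction is the relative change between two periods. The incident timestamps below are made up for illustration and are not the client's data; they are chosen so the result lands on the 25% goal.

```python
from datetime import datetime


def mttr_hours(incidents: list[tuple[str, str]]) -> float:
    """Mean Time to Repair: average of (repaired - reported) in hours."""
    total = 0.0
    for reported, repaired in incidents:
        delta = datetime.fromisoformat(repaired) - datetime.fromisoformat(reported)
        total += delta.total_seconds() / 3600
    return total / len(incidents)


def percent_reduction(before: float, after: float) -> float:
    return (before - after) / before * 100


# Hypothetical incident records: (reported, repaired) timestamps.
before = [("2025-01-06T08:00", "2025-01-06T16:00"),
          ("2025-01-09T10:00", "2025-01-09T22:00")]
after = [("2025-04-07T08:00", "2025-04-07T14:00"),
         ("2025-04-10T10:00", "2025-04-10T19:00")]

b, a = mttr_hours(before), mttr_hours(after)
print(b, a, percent_reduction(b, a))  # 10.0 7.5 25.0
```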

Results:

- MTTR reduced by 60% within two quarters.

- Full auditability for compliance.

- Scaled across three plants with measurable cost savings.

What Leaders Gain from This Approach

- Faster ROI validation — clear link between AI outcomes and business goals.

- Trust and accountability — built-in human oversight at every stage.

- Future-proofing — scalable, compliant, and vendor-agnostic design.

- Adoption and credibility — proven value before full rollout.

(Explore related thinking in How SharkAI Solutions Helps Businesses Unlock the True Potential of AI)

Ready to Replace Pilot Panic with Proof?

AI success isn’t about how many experiments you run — it’s about how quickly you prove value and scale it responsibly.

SharkAI Solutions helps enterprises move from proof-of-concept to proof-of-impact, with measurable ROI, human oversight, and enterprise-grade design.

📌 Contact us today to turn your pilot into a production-ready success story.