LLMOps: The Backbone of Enterprise-Ready AI

Large Language Models (LLMs) are reshaping industries — from automating customer support to powering intelligent analytics. But moving from a proof-of-concept demo to a production-grade AI system isn’t simple.

That’s where LLMOps (Large Language Model Operations) comes in.

What is LLMOps?

LLMOps is the discipline of managing the lifecycle of large language models in production. It extends traditional MLOps by focusing on the unique challenges of LLMs:

- Prompt management: Ensuring consistency and effectiveness across teams.

- Evaluation: Measuring hallucinations, accuracy, and fairness.

- Compliance: Protecting sensitive data under GDPR, HIPAA, or industry-specific rules.

- Monitoring: Tracking latency, cost, and usage drift in real time.

- Governance: Ensuring accountability through logs, audit trails, and access controls.

In short, LLMOps turns experimental prototypes into enterprise-grade systems that scale, comply, and adapt to evolving business needs.

Why LLMOps Matters

Without LLMOps, AI projects risk failure due to compliance violations, scaling issues, or unpredictable model behavior.

For example, when we built a Virtual Health Assistant on Azure, early prototypes sometimes generated unsafe treatment suggestions. By embedding LLMOps pipelines — including evaluation checks, audit trails, and human-in-the-loop reviews — we ensured responses were safe, compliant, and useful before deployment.

Key Components of LLMOps

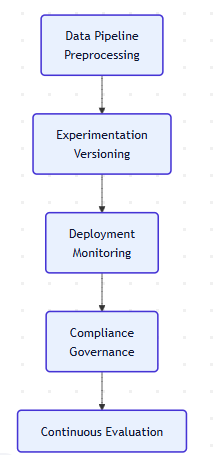

LLMOps covers the entire operational lifecycle:

1. Data Pipeline & Preprocessing

- Collect, clean, and structure raw inputs.

- Example: In the health assistant, we anonymized patient queries before feeding them into the model, ensuring compliance with healthcare data laws.

- Building robust pipelines often begins with extracting and structuring your organization’s own content sources — one approach is explained in our post on Extract Website Content for RAG, Context Engineering, and AI Pipelines.
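
As a rough illustration of the anonymization step described above, the sketch below masks a few common identifiers before a query reaches the model. The patterns and the `redact` helper are hypothetical and written for this post; they are not the pipeline used in the health assistant. A real deployment would rely on a vetted de-identification service and a review against the applicable regulation.

```python
import re

# Hypothetical patterns for illustration only. They catch a few obvious
# identifier formats and will miss names, addresses, and many other cases.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    query = "Reach me at jane.doe@example.com or 555-123-4567, born 1980-02-14."
    print(redact(query))
```

Keeping the patterns in one dictionary makes it easy to review, in a single place, exactly what is being masked.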

2. Experimentation & Versioning

- Track different prompts, models, and tuning runs.

- Each trial of the health assistant’s medical dialogue flows was versioned, so clinicians could compare accuracy and safety outcomes.
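
One lightweight way to version prompts is to derive a version id from the prompt text itself, so that identical templates always map to the same id. The sketch below is a minimal in-memory example under that assumption; `PromptRegistry` and its methods are hypothetical names, and a production setup would more likely use a database or an experiment-tracking tool.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """In-memory store mapping a prompt name to its ordered versions."""

    _versions: dict = field(default_factory=dict)

    def register(self, name: str, template: str) -> str:
        """Store a template and return a short content hash as its version id."""
        version_id = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        entries = self._versions.setdefault(name, [])
        # Re-registering identical text does not create a new version.
        if version_id not in [v for v, _ in entries]:
            entries.append((version_id, template))
        return version_id

    def get(self, name: str, version_id: str) -> str:
        """Return the exact template text behind a version id."""
        for v, template in self._versions.get(name, []):
            if v == version_id:
                return template
        raise KeyError(f"{name}@{version_id} not found")

    def history(self, name: str) -> list:
        """List version ids for a prompt in registration order."""
        return [v for v, _ in self._versions.get(name, [])]


if __name__ == "__main__":
    registry = PromptRegistry()
    v1 = registry.register("triage", "You are a cautious assistant. Question: {question}")
    v2 = registry.register("triage", "You are a cautious assistant. Cite sources. Question: {question}")
    print(registry.history("triage") == [v1, v2])
```

Logging the version id next to every model response is what makes a later comparison of accuracy and safety outcomes possible.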

3. Deployment & Monitoring

- Push models into production while tracking usage, latency, and costs.

- On Azure, we monitored not only uptime but also hallucination rates, so that unsafe medical advice could be detected and corrected quickly.
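
To make the monitoring idea concrete, here is a minimal sketch that wraps each model call and records latency, an approximate token count, and whether a checker flagged the reply. Everything in it is an assumption for illustration: `model_fn` and `is_flagged` are stand-ins you would supply, the token count is a crude whitespace split, and the price is a placeholder rather than any provider's real rate.

```python
import time
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class CallMetrics:
    """Running totals for a stream of model calls."""

    latencies_s: list = field(default_factory=list)
    total_tokens: int = 0
    flagged: int = 0

    def record(self, latency_s: float, tokens: int, was_flagged: bool) -> None:
        self.latencies_s.append(latency_s)
        self.total_tokens += tokens
        self.flagged += int(was_flagged)

    def summary(self, price_per_1k_tokens: float) -> dict:
        calls = len(self.latencies_s)
        return {
            "calls": calls,
            "mean_latency_s": mean(self.latencies_s) if calls else 0.0,
            "estimated_cost": self.total_tokens / 1000 * price_per_1k_tokens,
            "flag_rate": self.flagged / calls if calls else 0.0,
        }


def timed_call(metrics: CallMetrics, model_fn, prompt: str, is_flagged) -> str:
    """Call the model, then record latency, rough token usage, and flag status."""
    start = time.perf_counter()
    reply = model_fn(prompt)
    latency = time.perf_counter() - start
    # Whitespace split is only a rough proxy for real tokenizer counts.
    tokens = len(prompt.split()) + len(reply.split())
    metrics.record(latency, tokens, is_flagged(reply))
    return reply
```

In practice the summary would be exported to a monitoring backend on a schedule, with alerts on the flag rate as well as on latency and cost.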

4. Compliance & Governance

- Enforce role-based access, audit trails, and secure data retention.

- Only authorized doctors could review logs of sensitive patient conversations, which reduced the risk of data misuse.

- Compliance and governance also extend to how AI systems are adapted for enterprise needs. For instance, as shown in our work on LoRA Fine-Tuning with Stable Diffusion, personalization and guardrails go hand-in-hand when deploying AI responsibly.
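
The access-control and audit-trail ideas above can be sketched in a few lines. The example below assumes a simple role-to-permission map and an append-only list as the audit trail; the role names, the `AuditedLogStore` class, and the permission string are hypothetical. A real system would delegate authentication to an identity provider and write audit records to tamper-resistant storage.

```python
import json
from datetime import datetime, timezone

# Hypothetical role map: only doctors may read conversation logs.
ROLE_PERMISSIONS = {
    "doctor": {"read_conversation_logs"},
    "support_agent": set(),
}


class AuditedLogStore:
    """Conversation store that records every read attempt, allowed or not."""

    def __init__(self):
        self._conversations = {}
        self.audit_trail = []  # JSON strings, appended in order

    def add_conversation(self, conversation_id: str, text: str) -> None:
        self._conversations[conversation_id] = text

    def read(self, user: str, role: str, conversation_id: str) -> str:
        allowed = "read_conversation_logs" in ROLE_PERMISSIONS.get(role, set())
        # Write the audit entry before enforcing, so denials are logged too.
        self.audit_trail.append(json.dumps({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "conversation_id": conversation_id,
            "allowed": allowed,
        }))
        if not allowed:
            raise PermissionError(f"role '{role}' may not read conversation logs")
        return self._conversations[conversation_id]
```

Recording denied attempts alongside successful reads is a deliberate choice here: the denials are often the entries an auditor wants to see first.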

5. Continuous Evaluation

- Regularly assess accuracy, bias, and user satisfaction.

- In the health assistant, we built dashboards that tracked response correctness, which helped reduce call-center escalations.

- Effective orchestration is central to LLMOps, ensuring models, prompts, and tools interact smoothly. We covered this in depth in our post on Integrating LangGraph with an MCP RAG Server for Smarter AI Agents.
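
For the evaluation step itself, one simple pattern is a fixed set of test questions with terms a safe answer must include and terms it must avoid, run against the model on every change. The sketch below assumes that setup; `EvalCase`, `pass_rate`, and `gate` are hypothetical names, and substring matching is a deliberately crude stand-in for clinician review or a stronger automated grader.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    question: str
    must_include: list      # terms a safe answer is expected to contain
    must_not_include: list  # terms that mark an answer as unsafe


def passes(case: EvalCase, reply: str) -> bool:
    """Case-insensitive substring check against the case's term lists."""
    text = reply.lower()
    has_required = all(term.lower() in text for term in case.must_include)
    has_forbidden = any(term.lower() in text for term in case.must_not_include)
    return has_required and not has_forbidden


def pass_rate(cases: list, model_fn) -> float:
    """Fraction of cases whose reply passes; 0.0 for an empty suite."""
    if not cases:
        return 0.0
    return sum(passes(c, model_fn(c.question)) for c in cases) / len(cases)


def gate(cases: list, model_fn, threshold: float) -> bool:
    """Return True only if the pass rate meets the release threshold."""
    return pass_rate(cases, model_fn) >= threshold
```

A gate like this can run in CI so that a prompt or model change that lowers the pass rate is blocked before it reaches users, and the same pass rate can feed a dashboard over time.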

LLMOps in Action: Real-World Impact

Organizations of different sizes apply LLMOps in different ways:

- Enterprises: Governance and monitoring frameworks make AI adoption safe in regulated industries like finance, insurance, and healthcare.

- SMBs: A lighter LLMOps stack enables small firms to tap into cloud-based orchestration and monitoring without hiring large DevOps teams.

- Startups: Starting lean (for example, lightweight prototypes built with tools like Wav2Lip for video consultations) and then scaling with LLMOps discipline lets startups avoid the pitfalls of rushing AI into production while still impressing investors.

Competitive Advantage of LLMOps

For our healthcare client, embedding LLMOps into the Virtual Health Assistant:

- Reduced call-center load by 30%.

- Improved patient satisfaction scores by making interactions natural and empathetic.

- Freed up clinicians to handle more complex cases while routine queries were automated.

This shows that LLMOps isn’t optional — it’s the difference between a demo and a dependable enterprise system.

Conclusion

As AI adoption accelerates, enterprises that invest in LLMOps will be the ones to scale safely, efficiently, and competitively. Whether in healthcare, finance, or customer service, structured operations transform AI from a risky experiment into a long-term asset.

🚀 Partner with SharkAI Solutions to implement LLMOps tailored to your business. Whether you’re in healthcare, finance, or manufacturing, we help you deploy AI responsibly, at scale.

Contact SharkAI Solutions today to start your enterprise AI journey.